PUBLISHED

November 06, 2022

KARACHI:

In this digital era, it’s safe to say that parents are raising a generation of tech-savvy children. Everyone from toddlers to teenagers is using mobile phones and the internet. Schools are introducing various ways to stay connected to the world and keep up with the latest innovations and technology across the globe. Parents are left with no choice but to allow their children and teens to have mobile phones and internet access, as this lets them stay connected with their children and keep track of them.

When it comes to the safety of their children, parents are caught between the pros and cons of giving their children a digital space that offers the best opportunity to learn and thrive while also defining the boundaries of this freedom. Most parents understand that limiting children’s access to the internet could adversely impact their ability to learn and develop. But unrestricted access also means exposing children to online threats and content that may be harmful and potentially dangerous to them.

This is where online safety comes into play. Popular social media applications like TikTok, Facebook, Instagram, WhatsApp and YouTube have a minimum age of 13 years, which means the average teenager is also exposed to potential digital or cyber threats. This calls for parents to check on their children without restricting their access to the internet or the applications that help them stay connected to the world.

Social media applications have created different features to enable parents to keep a check on their children, while some suggest that parents use parental control applications. The barrier comes when the child believes these features will restrict them to limited options.

Online safety, however, covers all online platforms, including browsers and games. We recently saw the case of Dua Zehra, who was contacted through an online game. Her parents restricted her from playing that specific game, but she then shifted to other games. This incident highlights the importance of parents educating their children about online safety rather than setting limits alone.

Today we will be talking about social media applications, the platforms most commonly used by children. In Pakistan, recent data shows that the cyber harassment helpline received 4,441 online harassment complaints in 2021. The majority of these complaints were from women. The most common platforms for online harassment are Instagram, Facebook and WhatsApp.

On the government side, the Federal Investigation Agency (FIA) is currently the only body dealing with cyber harassment cases. This places a responsibility on the social media platforms to provide safety and earn parents’ trust that the application they are using is a safe place to navigate.

In-app safety features

The popular short-video platform TikTok has gained rapid popularity worldwide since its launch in 2016 and now has more than 1 billion active users. It is also a trendy application in Pakistan.

This rapid popularity is a constant source of worry for parents, who have to ensure that the application is safe, as it is rated 12+ in app stores. This means a child aged 13 or older can arguably be allowed to install and use the application, although with limited access due to the app’s safety protocols. The app also allows parents to link the TikTok application on their phone with their child’s through the in-app feature called Family Pairing.

What worries parents about their child? The amount of time they spend online, what content they are viewing, who they are talking to, what they are searching for, who is commenting or where they are commenting, and who can discover or possibly even target them.

TikTok’s Family Pairing feature does, however, address at least some of these concerns. It links a parent or guardian’s TikTok account to their teen’s and, once enabled, lets the parent manage the teen’s digital well-being, security and privacy features. The controls include:

- Screen Time Management: Parents can set how long their teen can spend on TikTok daily. They can choose between 40 and 120 minutes per day.

- Restricted Mode: This mode allows parents to limit the appearance of content that may not be appropriate for all audiences.

- Direct Messages: Parents can restrict who can send messages to the connected account or turn off direct messaging completely. TikTok says it already has many messaging policies and controls in place with user safety in mind; for example, only approved followers can message each other, and images or videos cannot be sent in messages.

- Search: Decide whether your teen can search for content, users, hashtags, or sounds

- Comments: Decide who can comment on the teen’s videos (everyone, friends, no one)

- Discoverability: Decide whether your teen’s account is private (your teen decides who can see their content) or public (anyone can search and view content)

Jiagen Eep, who leads Market Integrity and Enablement for Trust and Safety in Asia-Pacific at TikTok, told The Express Tribune about teens’ online safety: “TikTok specifically is designed for users aged 13 and above, and we believe it is more important than ever for parents to be involved and aware of what their children are doing on digital devices. TikTok is growing exponentially across the region, so we encourage parents to be informed on what TikTok is and its key safety features like Family Pairing. More generally, we encourage parents to take an active role in their teen’s online experience by starting the conversation early about internet safety, online privacy, and the options available to them.”

He added that a parent’s guidance can be invaluable and recommended that the parents take advantage of the variety of privacy, safety and digital well-being features they’ve put in place to ensure a safe and positive experience on the application for their child.

Meta, the parent company of Facebook, Instagram and WhatsApp, has similar options but doesn’t let parents set them on the child’s app from their own device. The options can be enabled from the child’s phone only. The company has rolled out multiple guidelines for users to keep themselves safe from cyber threats. Still, it cannot keep users completely safe, as most online harassment cases reported are from these three platforms.

Educating parents and teens

The most common fear among parents is that keeping children away from social media apps will restrict their learning and growth. Some parents believe that keeping them away from the internet or restricting them from using the application will keep them safe, while others want their children to learn, explore the world and stay safe at the same time. Both types of parents are fearful primarily because they are not aware of the online options available to help them keep their teen safe.

Jiagen believes parenting a teen’s digital life can be daunting, and that the introduction of new technologies continues to shift our culture and the way we parent our children. “Just like we did when television or video games were introduced, it’s important that we again find the right balance between safety and autonomy. Parents and guardians must talk regularly with their kids and teens about their digital lives. Teens appreciate being heard, so offer them a safe space to ask questions and share their social media experiences. Making it normal to talk about social media can help build trust and openness within families. Our Safety Center for Parents offers resources to help families have a productive dialogue with their teens about how to be responsible and safe in all online activities,” he said.

The guidelines that the platform suggests for parents include the following:

- Starting conversations early: Parents and caregivers are encouraged to take an active role in their teen’s online experience and to start the conversation about internet safety, privacy, and other options.

- Setting common sense rules: Parents should talk to their children about common sense rules they would want to adopt on TikTok (e.g. no talking to strangers, limiting screen time).

- Meeting teens where they are: There can be moments when things happen on social platforms, and our teens need to learn how to defend themselves from predatory behaviour online. Parents need to engage them at the level they understand.

- Encouraging kindness and good online etiquette: Parents should talk to their teens about being responsible with their digital identity.

- Using tools to manage their online experience: As young people build an online presence, it’s vital to give families tools so parents and teens can set guardrails together.

TikTok has partnered with NGOs like Zindagi Trust to educate parents and teens about digital well-being. Together they have held multiple workshops in schools, where parents were taught how to keep their children safe and children were educated about using the application safely.

One of the parents, Shivaji, who was concerned about his children’s safety and used to restrict them from using any social media platform, told The Express Tribune that after learning about the dangers present online and how parents can keep their children safe, he now understands that the platforms themselves are not the problem; what matters is how users use them.

“I was not aware of the various options that are present to keep our children safe, following which I used to stop my kids from using the internet and social media apps. The kids used to complain about restricting them, but I was more concerned about their safety. But now, after learning how I, as a parent, can keep a check on their activity, I think we can make the digital space safe for them,” said the father of a seventh grader.

Jiagen, talking about parents who are on the fence about their teen or themselves joining the platform, said that being on the fence is normal and healthy scepticism is okay. “Every time our kids engage in something new, it’s natural for us to get nervous. As a parent or guardian, you’re best positioned to know what is appropriate for your teen, and you have the power to keep them safe on TikTok. It’s important to nurture our teens to help them make autonomous decisions – to be free-thinking people. As parents and guardians, we need to have faith in their judgement and ensure they have a positive and healthy online experience,” he said.

Because most people start their digital journey at age 13, social media platforms set the accounts of users aged 13 to 15 to private by default. This means only the people they allow to follow them will be able to interact with them or discover them in search. The platforms regularly check accounts and remove those they believe belong to underage users.

“Kids are quite clever when it comes to working around technology parameters. And as much as we admire their ingenuity and creativity, we delete accounts when we become aware that a user is under 13. TikTok does not allow accounts to change their birthdays,” said Jiagen.

The platform also has a dedicated “Guardian’s Guide” page within Safety Center which offers more information and resources to assist parents in reviewing and adjusting their teenagers’ privacy settings.

The expanded Community Guidelines define a common code of conduct for the platform to provide a positive online environment for creative self-expression while remaining safe, diverse, and authentic. TikTok’s guidelines help users understand when and why certain restrictions are placed and shed light on the content and behaviour that isn’t allowed on the platform.

Moderators and proactive support

Social media platforms have artificial intelligence and human moderators to moderate the content being uploaded to the platform. As a result, they end up taking down several accounts, videos, and posts that go against the community guidelines.

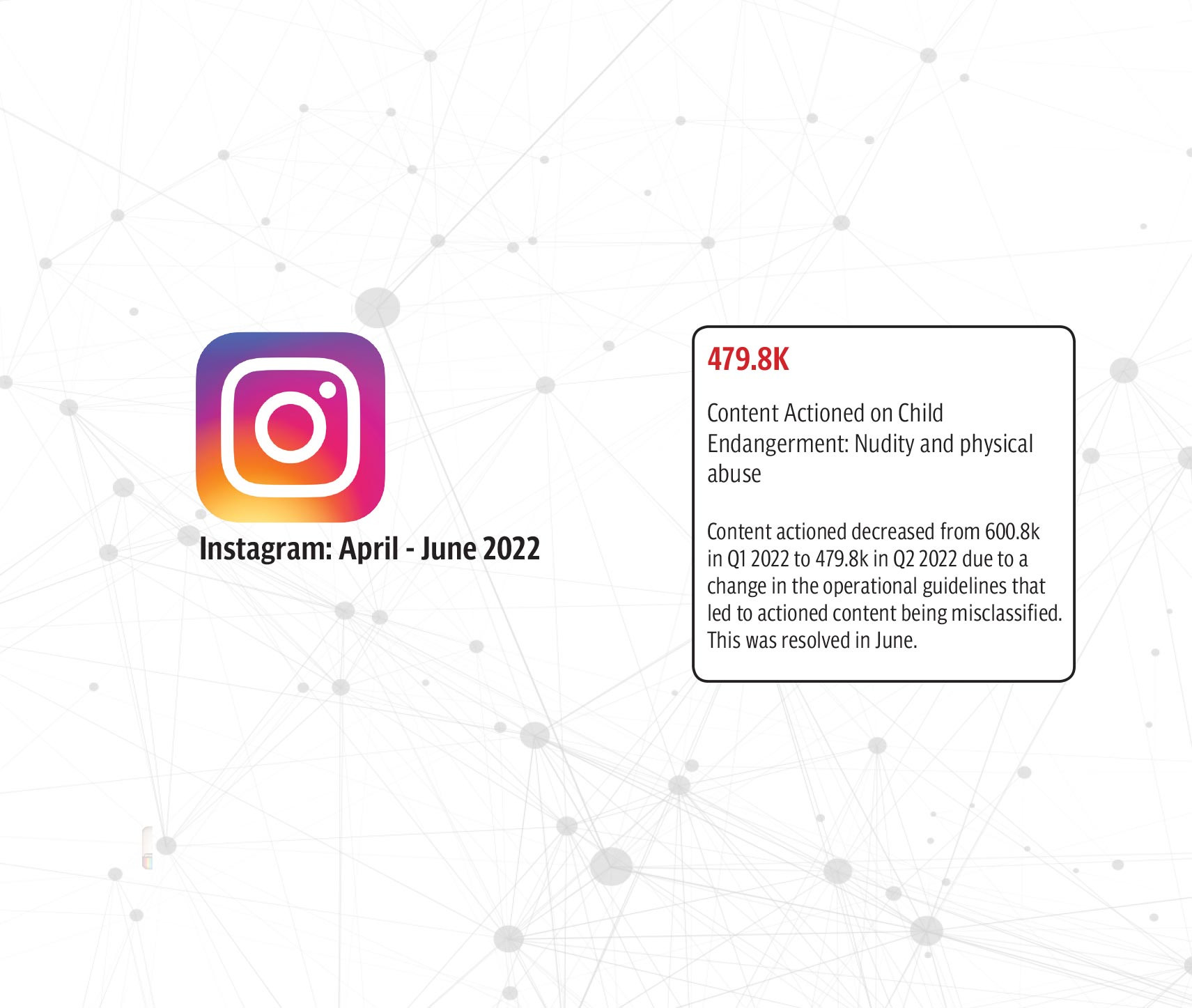

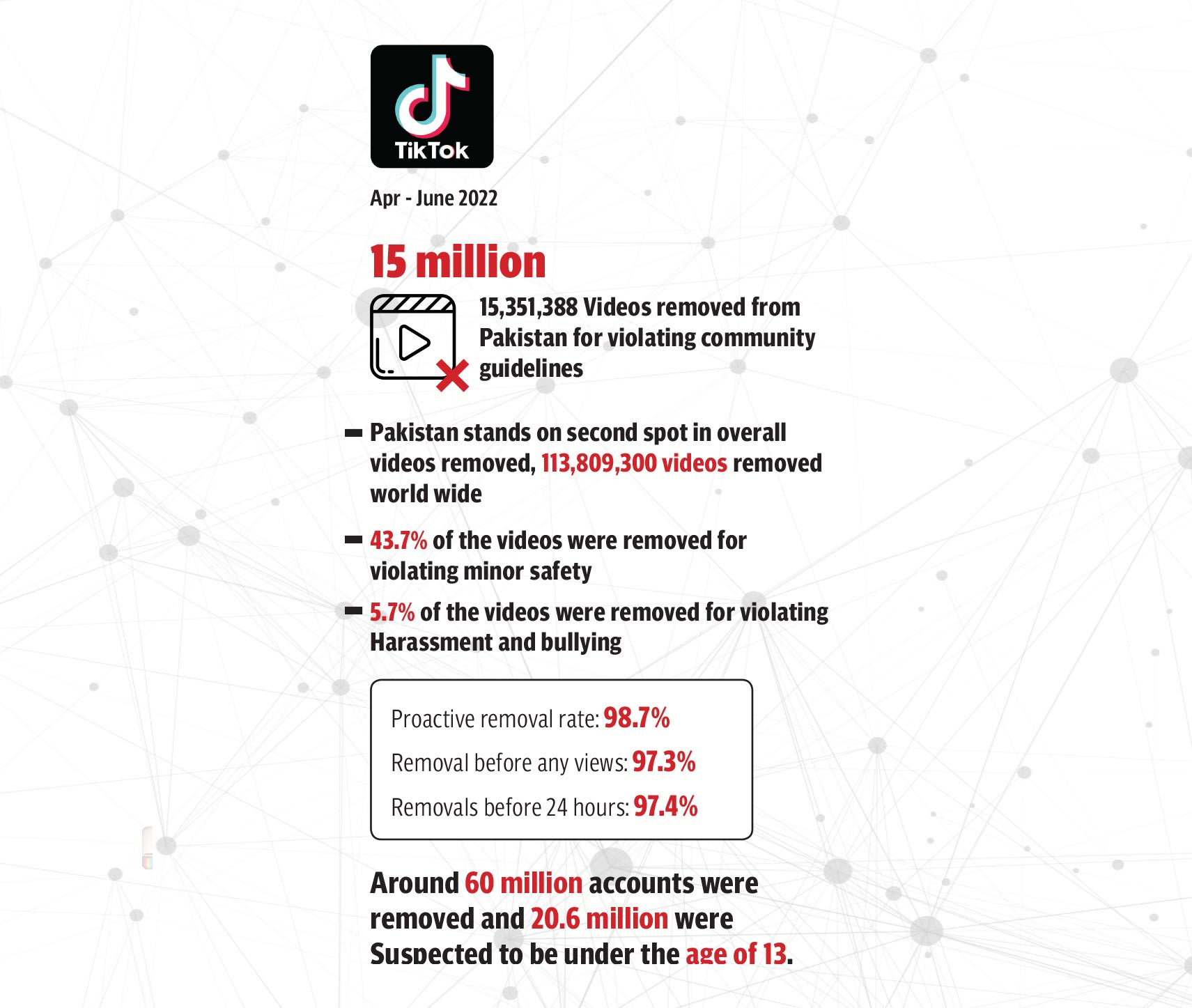

According to the recent community guidelines enforcement report covering April to July 2022, TikTok took down 15 million videos from Pakistan that violated community guidelines. This number makes up only one per cent of the total uploaded videos and ranks Pakistan second in the world by number of videos removed. The global number of videos taken down in this period stands at 113 million. Regarding child safety, 43.7% of the videos were removed for violating Minor Safety policies, and 5.7% were removed for violating harassment and bullying policies.

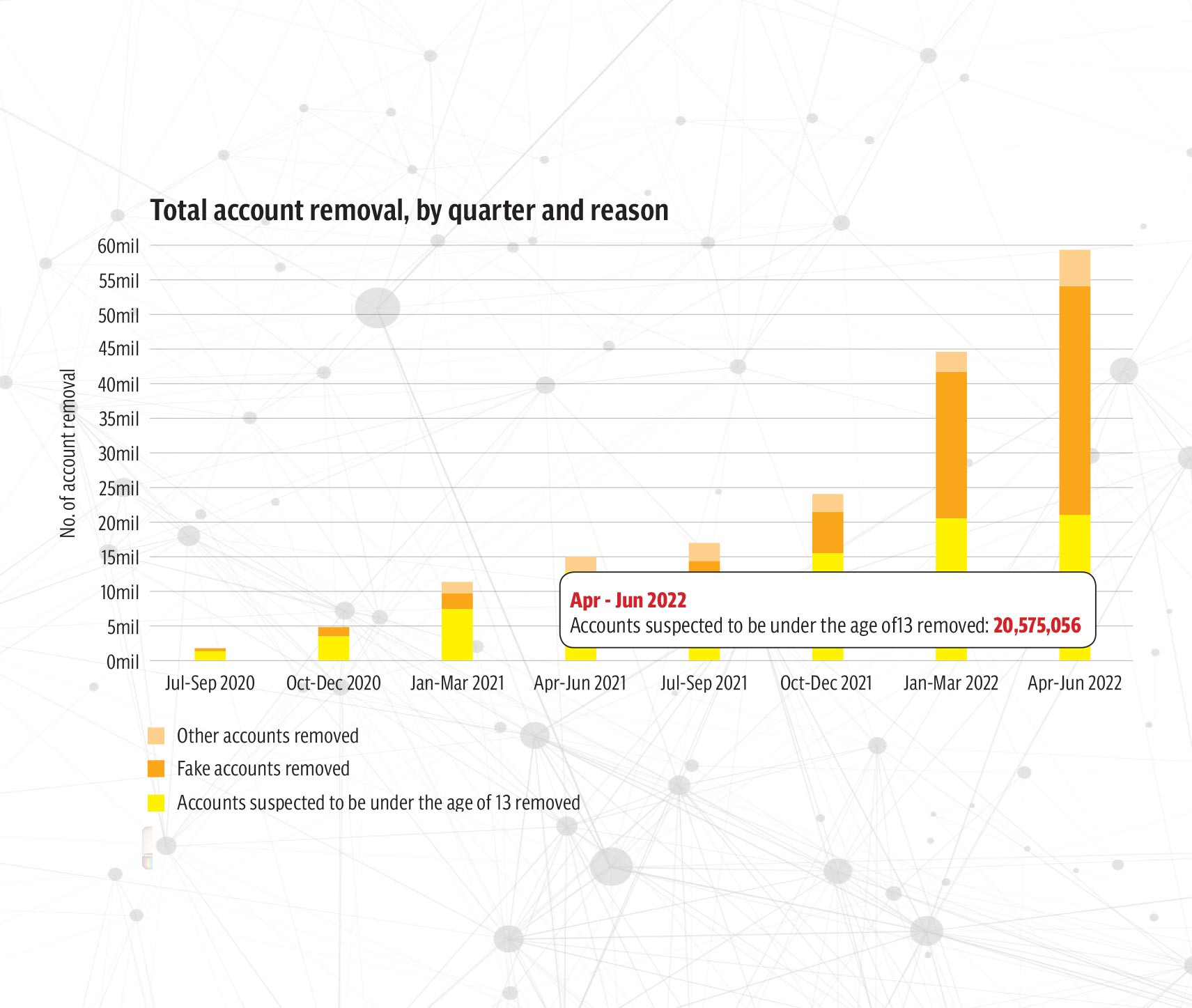

Although the platform enables parents and their teens to report anything that might violate the Terms of Service or Community Guidelines, it proactively removed 98.7% of the videos mentioned above; 97.4% of videos were removed within 24 hours and 97.3% before receiving any views. Around 60 million accounts were also removed, and 20.6 million were suspected of belonging to users under 13.

“We believe that feeling safe helps users feel comfortable expressing themselves openly and allows creativity to flourish. Our global guidelines are the basis of the moderation policies TikTok’s regional and country teams implement in accordance with local laws and norms. Our teams remove content that violates these guidelines and suspend or ban accounts involved in severe or repeated violations. We update our Community Guidelines from time to time to evolve with community behaviour, mitigate emerging risks, and keep TikTok a safe place for creativity and joy,” said Jiagen of TikTok, which has increased its local human moderator team by 300% for Pakistan.

He added that the platform has made concerted efforts to strengthen its content moderation team in Pakistan, in tandem with advanced technologies, to ensure that objectionable videos that violate its Community Guidelines are taken down immediately. “With the combination of a dedicated team of native Pakistanis who understand the culture and the language and a robust, state-of-the-art, machine learning mechanism that uses automation in the content moderation process, TikTok proactively removes any inappropriate content and terminates accounts that violate its Terms of Service and Community Guidelines, to make the platform a safer and more welcoming space for its Pakistani community.”

TikTok’s Community Guidelines strictly prohibit posting, sharing, or promoting harmful or dangerous content, graphic or shocking content, discrimination or hate speech, nudity or sexual activity, child safety infringement, harassment or cyberbullying, impersonation, spam or other misleading content, intellectual property and workplace content, and other malicious activity.

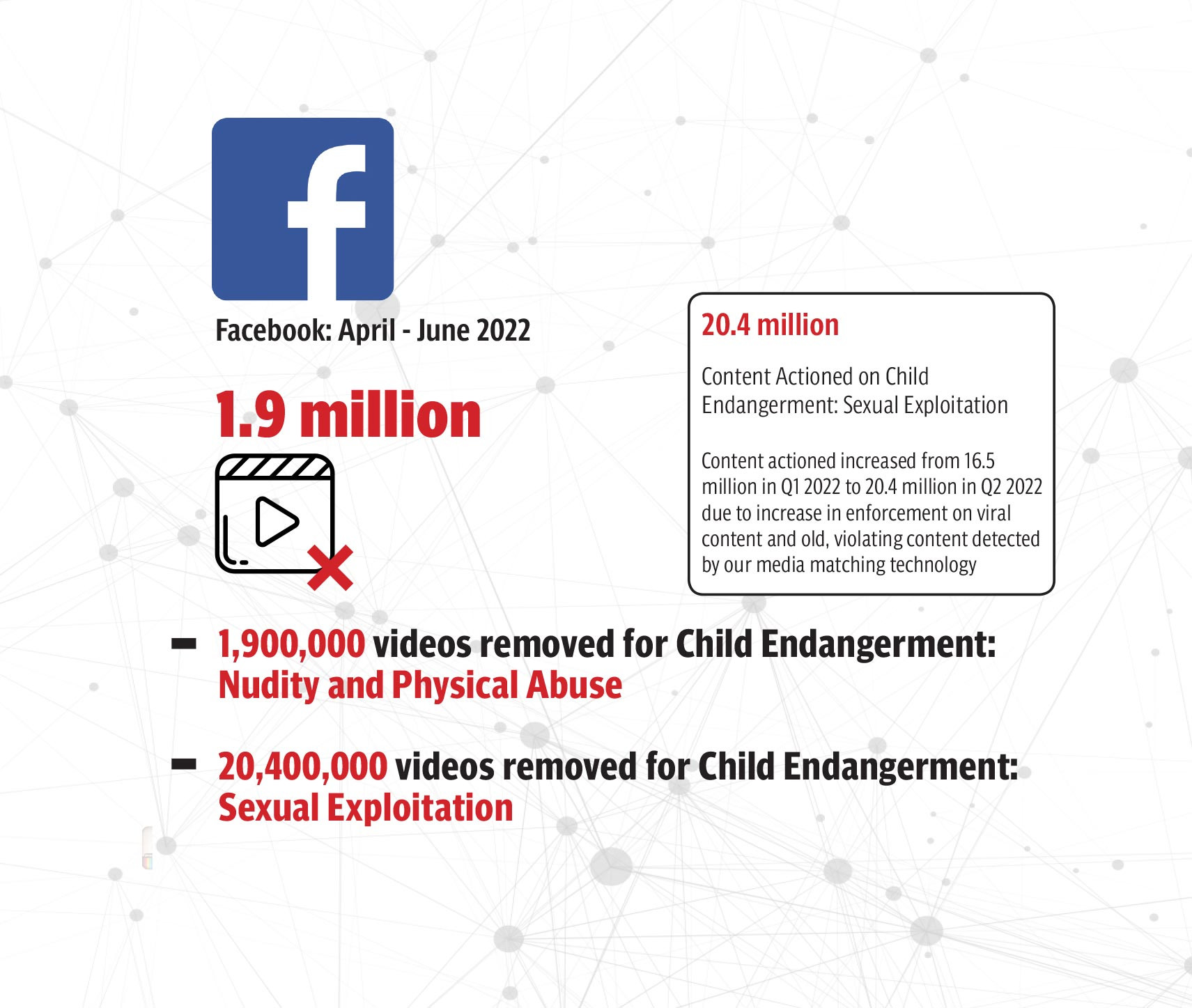

Meanwhile, according to data available for Meta for the same period, a total of 1.9 million videos were removed for Child Endangerment: Nudity and Physical Abuse and 20.4 million videos for Child Endangerment: Sexual Exploitation globally. The number for Pakistan was not shared by the platform.

According to Mohib Masroor, who operates Khel Shel, one of the leading sports platforms, the moderation team has flaws in detecting false or fake accounts. His platform has an audience that ranges in age from 13 to 65, and its content is created and shared with this demographic in mind. Recently, a user on Instagram contacted his team and, in an attempt to scam them, took over ownership of the platform’s Facebook page. Since then, the hacker has been posting adult content in the page’s stories and linking it to some websites. “This is a clear violation of Meta’s community guidelines. Still, despite reporting and having exchanged emails with the Facebook team, neither I can get the page access back nor restrict the hacker from posting adult content in stories,” he said.

He added that both platforms used for the hacking belong to Meta, and that despite being provided with all the proof, the company has been unable to offer a resolution for the last five months. “This shows that Pakistan is still a low-priority market for them and doesn’t have any local team to deal with similar issues,” he said, adding that scammers can just as quickly contact a child and misuse the account or cause some other harm online.

This correspondent contacted Facebook for comment on the incident; however, the company declined.

Harmful challenges and reporting

Following TikTok’s success with short videos, other platforms have launched the format too. But all good things have a potential downside. As short videos became famous for different challenges, some users misused the format and launched dangerous and harmful challenges, several of which led to users being injured. Every user does, however, have the option to report these challenges. Some of the most dangerous TikTok challenges right now include the NyQuil Chicken trend and the Blackout trend. The former involves taking a swig of NyQuil and then eating a chicken wing. While this sounds innocent enough, the combination of chicken and NyQuil (which contains some alcohol) can be dangerous as it increases the risk of choking. There have been several reports of people passing out and even dying while carrying out the trend.

The Blackout trend, as the name suggests, challenges participants to try to make themselves faint by holding their breath and constricting their chest muscles. However, without oxygen, the brain begins to die, which can result in permanent brain injury or even death.

Jiagen of TikTok, explaining the risk, said that nobody wants their friends or family to get hurt filming a video or trying a stunt. He said such content is not funny and, since it is removed, certainly won’t make anyone TikTok famous, and he urged users to report anything questionable they see online or in real life.

“As we make clear in our Community Guidelines, we do not allow content that encourages, promotes, or glorifies dangerous challenges that might lead to injury, and we remove reported behaviour or activity that violates our guidelines. To help keep our platform safe, we have introduced a slate of safety features geared towards enhancing our users’ experience, including tools for reporting inappropriate content and managing privacy settings,” said Jiagen.

“Our technology systems and moderation teams have been detecting and removing these clips for violating our policies against content that displays or promotes suicide, and we’re banning accounts that upload them. We appreciate our community members who’ve reported content and warned others against watching, engaging, or sharing such videos on any platform out of respect for the person and their family.”

He added that users struggling with mental well-being are encouraged to seek support and have access to hotlines directly from the app and in the Safety Center. “We believe better cooperation among tech companies is key to solving these content safety issues that spread across platforms,” Jiagen said.

He further said that TikTok has added a harmful activities section to its minor safety policy to reiterate that content promoting dangerous dares, games, and other acts that may jeopardise the safety of youth is not allowed on TikTok. “We encourage people to be creative and have fun, but not at the expense of an individual’s safety or the safety of others.”

Despite all the moderation, safety features and community guidelines on social media platforms, making the digital space safe for our children, the most vulnerable part of society, requires everyone to take part: reporting any content they feel is inappropriate for children and educating society about the safe use of social media applications.